What Is Sora: 7 Reasons OpenAI Text to Video Is the Best

Remember when creating a professional video required a film crew, expensive equipment, and weeks of editing? What if you could generate a stunning, minute-long video from a single sentence? This is the paradigm shift Sora, OpenAI’s groundbreaking text-to-video model, brings to the table. As the digital content landscape explodes, with a projected 82% of all consumer internet traffic coming from video by 2025, the demand for scalable, high-quality video production has never been higher.

Understanding what Sora is has moved beyond niche curiosity; it’s a strategic imperative for creators, marketers, and businesses aiming to stay ahead. This article will demystify Sora and give you seven reasons why OpenAI’s approach to text to video is poised to dominate the creative world.

What is Sora? The Core Technology Unveiled

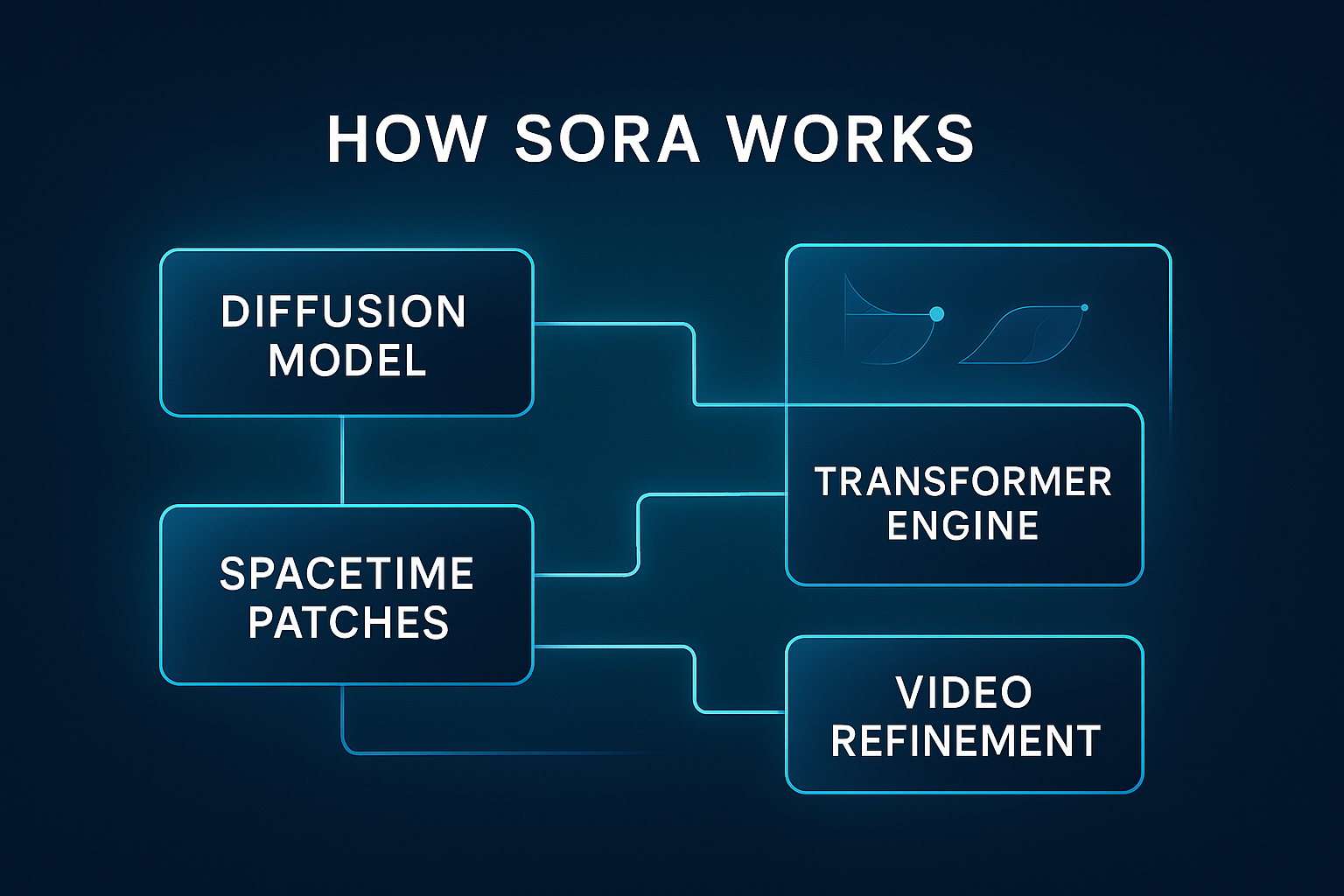

At its heart, Sora is a diffusion transformer model: an AI architecture that turns text prompts into realistic and imaginative video scenes. It represents a major leap in generative AI, moving beyond static images to dynamic, temporal storytelling. To understand Sora and its capabilities, let’s break down its core technological components:

- Diffusion Model Foundation: Sora starts with a video that looks like static noise and refines it over many steps, removing the noise until a coherent video matching the text description emerges. This process allows for the generation of highly detailed and varied visuals.

- Transformer Architecture: Like OpenAI’s GPT models, Sora is built on a transformer architecture, which scales well and helps it follow language closely. It doesn’t just match keywords; it interprets context, emotion, and complex instructions so that the generated video stays logically consistent with the prompt.

- Spacetime Patches: This is Sora’s secret sauce. Instead of processing entire frames, it breaks down videos into small patches of both space and time. This allows the model to understand and generate motion, object permanence, and long-range dependencies, resulting in remarkably smooth and coherent videos.

- Scalable Training on Diverse Data: Sora was trained on a massive, diverse dataset of videos and images, enabling it to understand a vast range of styles, subjects, and cinematic techniques.
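To make the spacetime-patch idea concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not Sora’s actual code: OpenAI’s technical report describes patches being cut from a compressed latent representation, whereas this toy version cuts them from raw single-channel pixels, and the function name `to_spacetime_patches` and the patch sizes are hypothetical.

```python
from typing import List

# A toy video indexed as video[t][y][x], with one channel for brevity.
Video = List[List[List[float]]]


def to_spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a video into non-overlapping patches of pt frames x ph rows x pw columns.

    Each patch is flattened into one vector, the role a token plays in a transformer.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")

    patches = []
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                # Gather every pixel inside this block of time and space.
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches


# Toy clip: 4 frames of 4x6 pixels, each pixel storing its own frame index.
clip = [[[float(t) for _ in range(6)] for _ in range(4)] for t in range(4)]
tokens = to_spacetime_patches(clip, pt=2, ph=2, pw=3)
print(len(tokens), len(tokens[0]))  # prints: 8 12
```

Because every patch spans two frames, each token carries a small slice of motion as well as appearance, which is the property the spacetime-patch bullet describes.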

The Generative AI Evolution: Sora’s Place in the Timeline

The advent of Sora didn’t happen in a vacuum. It’s the culmination of a rapid evolutionary timeline in generative AI. To appreciate Sora’s significance, we must put its arrival in context.

- 2022-2023 (The Image Revolution): Models like DALL-E 2 and Midjourney mastered high-quality static image generation, setting a new standard for AI creativity and proving the viability of diffusion models.

- Late 2023 – Early 2024 (The Video Prototypes): Early text-to-video models from other labs emerged, capable of generating short, often low-fidelity clips. They demonstrated potential but were limited by short duration, jittery motion, and logical inconsistencies.

- 2024 and Beyond (The Sora Era): Sora enters the scene, shattering previous limitations. With its ability to produce up to 60-second videos with multiple characters, specific types of motion, and accurate details of the subject and background, it represents a generational leap. Industry analysts project that by 2026, AI-generated video tools like Sora will be integrated into the workflows of over 35% of large creative agencies.

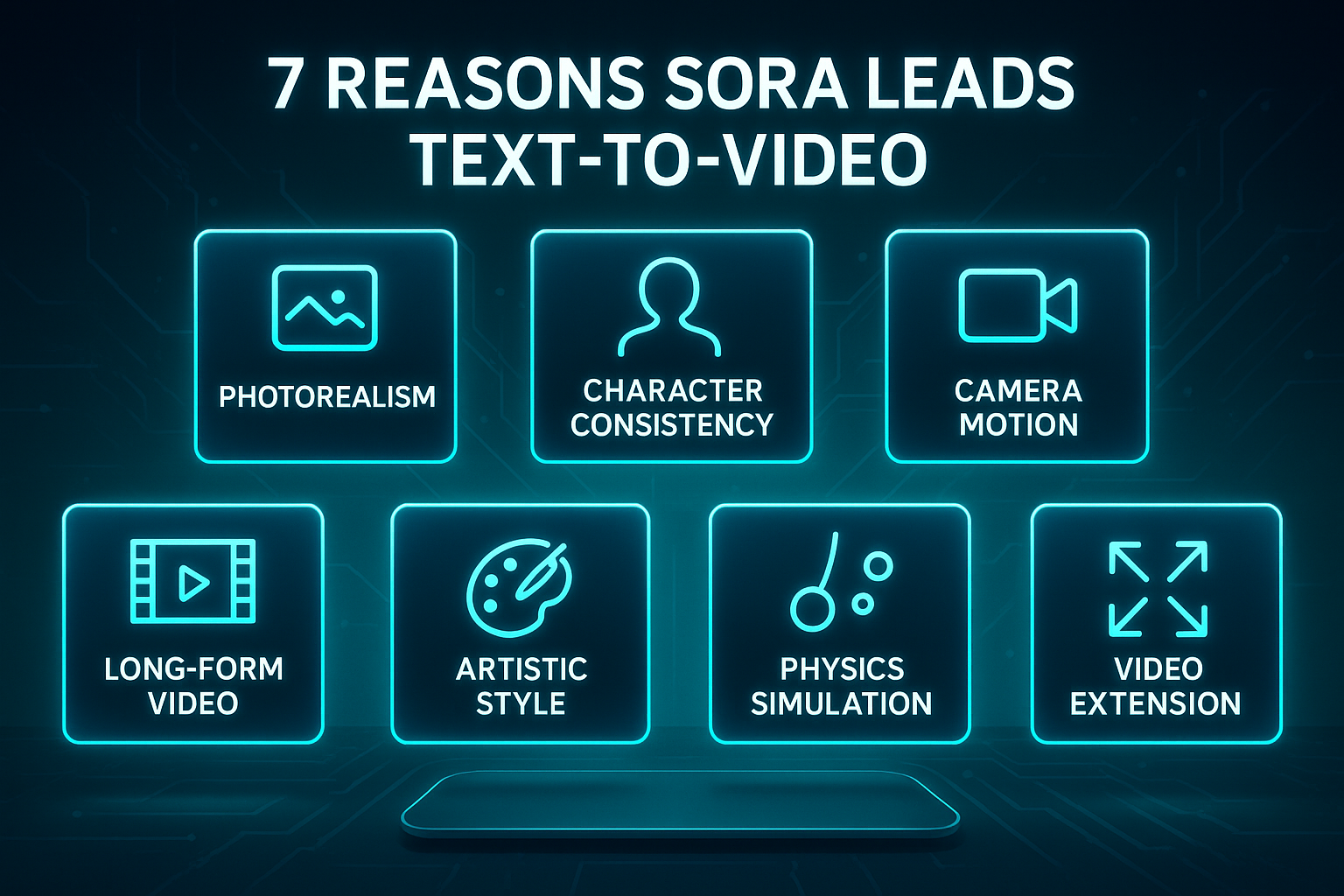

7 Reasons Why OpenAI Text to Video with Sora is the Best

1. Unprecedented Photorealism and Fidelity

The first thing that strikes you about Sora’s output is its visual quality. Unlike its predecessors, which often had a distinct “AI-generated” look, Sora produces videos whose photorealism can, at its best, be hard to distinguish from filmed footage. Lighting, textures, shadows, and reflections are usually rendered convincingly, although physical glitches still occur. This fidelity is Sora’s most immediate and compelling feature, making it a candidate for professional use cases where quality is non-negotiable.

2. Complex Scene and Character Understanding

Sora excels at understanding not just objects, but entire scenes and narratives. It can generate videos featuring multiple characters, specific types of motion, and intricate backgrounds. More impressively, it maintains character consistency throughout the video—a feat earlier models struggled with. This deep semantic understanding means you can prompt for a “woman walking her dog in a neon-lit Tokyo street at night with rain-slicked pavement reflecting the signs,” and Sora will comprehend and render each element cohesively.

3. Coherent and Dynamic Camera Motion

A static shot is one thing; dynamic cinematography is another. Sora can simulate complex camera movements like dolly shots, pans, zooms, and tracking shots with smooth, realistic motion. This dynamic capability elevates the output from a simple animation to a piece of cinematic content, providing a powerful tool for storytelling that was previously exclusive to human cinematographers.

4. Long-Form Video Generation (Up to 60 Seconds)

While many early video models capped out at a few seconds, Sora’s ability to generate videos up to a full minute is a game-changer. This duration opens up possibilities for short advertisements, social media clips, educational explainers, and dreamlike sequences that require more time to develop, making the OpenAI text to video offering significantly more versatile.

5. Strong Composition and Artistic Style Adherence

Whether you’re aiming for the aesthetic of an Ansel Adams photograph or a vibrant anime style, Sora can not only replicate the style but also maintain strong visual composition. It understands concepts like the rule of thirds, leading lines, and color theory, resulting in outputs that are not just accurate, but aesthetically pleasing.

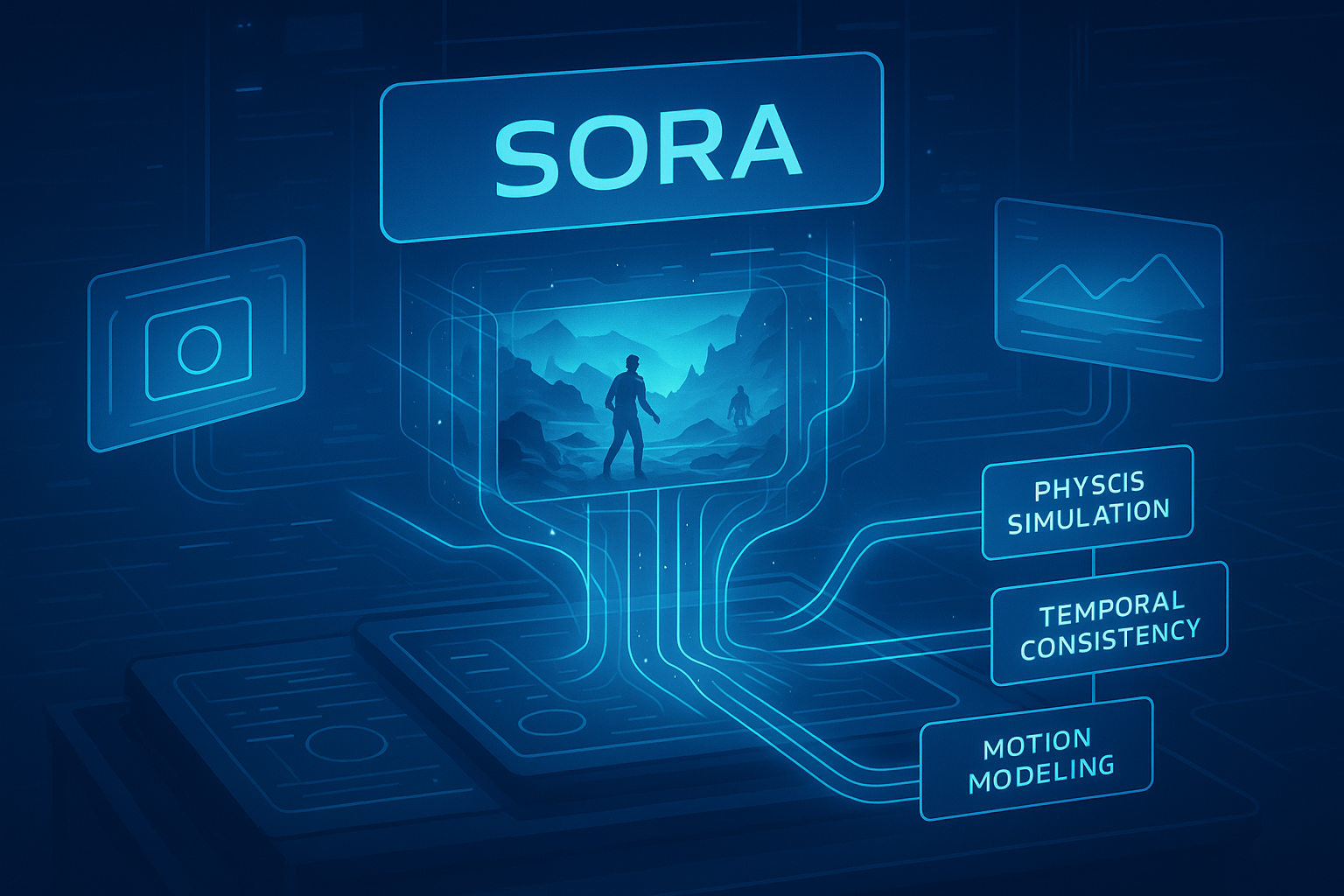

6. Emerging Simulation Capabilities and World Modeling

Perhaps the most futuristic aspect of Sora is its emergent ability to simulate aspects of the real world. It can often render plausible physics, like a character taking a bite out of a burger and leaving a bite mark, or water creating realistic ripples. This suggests the model is building an internal representation of how our world works, a foundational step towards more general AI.

7. Seamless Video Extension and Inpainting

Beyond generating from scratch, Sora can take an existing video and extend it, either forward or backward in time. It can also perform video inpainting, seamlessly filling in missing frames or replacing objects within a scene. This makes it an invaluable tool for editing and enhancing existing footage, not just creating new content.

Quantifying the Impact: Performance Metrics for Creators

Adopting a tool like Sora isn’t just about cool tech; it’s about tangible business and creative impact. Here’s how the OpenAI text to video model delivers measurable value:

- Speed-to-Market: Reduce video production timelines from weeks to minutes. Concept-to-final-video cycles can be accelerated by over 90% for certain types of content.

- Cost Efficiency: Drastically lower production costs by minimizing or eliminating the need for actors, sets, and physical filming.

- Content Scalability: Generate hundreds of personalized video variants for A/B testing or targeted marketing campaigns at a fraction of the traditional cost.

- Creative Experimentation: The low marginal cost of generating new ideas fosters a culture of rapid experimentation, leading to more innovative and effective content.
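As a rough illustration of the content-scalability point, the sketch below expands one prompt template into every combination of a few options, giving a batch of variants to generate and A/B test. The template wording, the option values, and the helper name `prompt_variants` are all hypothetical; the code only builds prompt strings and does not call any OpenAI service.

```python
from itertools import product
from typing import Dict, List


def prompt_variants(template: str, options: Dict[str, List[str]]) -> List[str]:
    """Fill the template once for every combination of the option values."""
    keys = list(options)
    combos = product(*(options[key] for key in keys))
    return [template.format(**dict(zip(keys, combo))) for combo in combos]


template = "A {shot} of a {item} in a {setting}, {mood} lighting"
options = {
    "shot": ["close-up", "slow tracking shot"],
    "item": ["ceramic coffee mug"],
    "setting": ["sunlit kitchen", "rainy cafe window"],
    "mood": ["warm", "cool"],
}

variants = prompt_variants(template, options)
print(len(variants))  # prints: 8
print(variants[0])    # prints: A close-up of a ceramic coffee mug in a sunlit kitchen, warm lighting
```

The number of variants is the product of the option counts (here 2 x 1 x 2 x 2), so a handful of options per slot quickly reaches the hundreds of variants mentioned above.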

Optimization Strategies for Superior Sora Prompts

To get the best results from Sora and truly leverage the power of OpenAI text to video, you must master the art of the prompt. Here are key strategies:

- Be Cinematically Specific: Don’t just describe the subject; describe the shot. Use terms like “close-up,” “low-angle shot,” “slow-motion,” or “cinematic lighting.”

- Incorporate Emotional and Stylistic Cues: Words like “serene,” “chaotic,” “nostalgic,” or “futuristic” guide the model’s tone.

- Reference Real-World Styles and Artists: Prompt with “in the style of Hayao Miyazaki” or “photographed like Steve McCurry” for more curated results.

- Iterate and Refine: Treat your first generation as a first draft. Analyze the output and refine your prompt to correct inconsistencies or enhance details.
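One way to apply these strategies consistently is to keep the parts of a prompt in separate fields and assemble them on demand. The sketch below is a hypothetical helper, not part of any OpenAI tooling: the `ShotPrompt` class, its field names, and the joining format are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field, replace
from typing import List


@dataclass
class ShotPrompt:
    subject: str                     # what is in the scene
    shot: str = ""                   # cinematic framing, e.g. "Close-up"
    mood: str = ""                   # emotional cue, e.g. "nostalgic"
    style: str = ""                  # stylistic reference, e.g. an artist's name
    details: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty parts into a single comma-separated prompt."""
        parts = [f"{self.shot} of {self.subject}" if self.shot else self.subject]
        if self.mood:
            parts.append(f"{self.mood} mood")
        if self.style:
            parts.append(f"in the style of {self.style}")
        parts.extend(self.details)
        return ", ".join(parts)


# First draft: subject and shot only.
draft = ShotPrompt(
    subject="a woman walking her dog on a Tokyo street at night",
    shot="Low-angle tracking shot",
)

# Refinement: same shot, with mood and concrete visual details added.
refined = replace(
    draft,
    mood="nostalgic",
    details=["rain-slicked pavement reflecting neon signs", "cinematic lighting"],
)

print(draft.render())
print(refined.render())
```

Keeping the draft and the refinement as separate objects makes it easy to compare what changed between iterations when a generation improves or regresses.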

Sora in Action: Real-World Use Cases and Applications

The versatility of Sora is evident in its wide range of applications across industries. When asking “what is Sora good for?”, the answers are expansive:

- Marketing & Advertising: Create hyper-personalized video ads, dynamic social media content, and compelling product demos without a production budget.

- Film & Entertainment: Rapidly prototype storyboards, create pre-visualization sequences, and generate visual effects assets.

- Education & E-Learning: Produce engaging educational videos and historical reenactments, and explain complex concepts with dynamic visuals.

- Architecture & Real Estate: Generate realistic fly-throughs of unbuilt architectural designs or stage empty properties with virtual furniture and decor.

Common Pitfalls and How to Avoid Them

As with any powerful technology, there are challenges. Being aware of them is the first step to mitigation.

- Pitfall 1: Unrealistic Physics. The model can sometimes generate videos where gravity, object interactions, or cause-and-effect are slightly off.
  - Solution: Describe the physical behavior you expect explicitly in the prompt when precision is critical, and regenerate if the result is still off.

- Pitfall 2: Temporal Inconsistencies. Characters might change clothes or objects might teleport between cuts in longer generations.
  - Solution: Use more specific character and object descriptions and experiment with shorter scenes.

- Pitfall 3: Over-reliance on AI. It is tempting to use Sora as a complete replacement for human creativity rather than as a tool to augment it.
  - Solution: Develop a synergistic workflow where Sora handles the heavy lifting of asset generation, and humans focus on direction, narrative, and final curation.

Maintaining and Scaling Your Sora Workflow

To future-proof your use of this OpenAI text to video technology, consider these best practices:

- Establish a Prompt Library: Build a curated database of your most effective prompts and their outputs to streamline future projects.

- Integrate with Post-Production: Use Sora-generated clips as base layers in professional editing software like Adobe Premiere Pro or DaVinci Resolve for color grading, sound design, and final compositing.

- Stay Updated on Model Iterations: OpenAI consistently improves its models. Stay informed about new features, longer durations, and enhanced capabilities to continuously elevate your output.
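A prompt library does not need special software to get started. The sketch below stores prompts in a single JSON file using only Python’s standard library; the file name, the entry fields (`prompt`, `output_file`, `tags`), and the function names are assumptions made for illustration, and a team with many users would likely want a shared database instead.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def load_library(path: Path) -> Dict[str, dict]:
    """Return the saved prompts, or an empty library if the file does not exist yet."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def save_prompt(path: Path, name: str, prompt: str, output_file: str, tags: List[str]) -> None:
    """Add or overwrite one entry, then write the whole library back to disk."""
    library = load_library(path)
    library[name] = {"prompt": prompt, "output_file": output_file, "tags": tags}
    path.write_text(json.dumps(library, indent=2), encoding="utf-8")


def find_by_tag(path: Path, tag: str) -> List[str]:
    """List the names of entries carrying the given tag, in alphabetical order."""
    return sorted(name for name, entry in load_library(path).items() if tag in entry["tags"])


# Demo in a temporary directory so nothing is left behind on disk.
with tempfile.TemporaryDirectory() as tmp:
    library_file = Path(tmp) / "prompt_library.json"
    save_prompt(
        library_file,
        "tokyo-night-walk",
        "Low-angle tracking shot of a woman walking her dog on a Tokyo street at night",
        "clips/tokyo_v3.mp4",
        ["city", "night"],
    )
    save_prompt(
        library_file,
        "forest-dawn",
        "Slow aerial shot over a misty forest at dawn",
        "clips/forest_v1.mp4",
        ["nature"],
    )
    night_prompts = find_by_tag(library_file, "night")
    print(night_prompts)  # prints: ['tokyo-night-walk']
```

Storing the output file path next to each prompt keeps the link between wording and result, which is what makes the library useful when a later project needs a similar look.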

Conclusion

So, what is Sora? It’s more than just another AI tool; it’s a foundational shift in how we conceive and create visual media. Its combination of photorealism, complex scene understanding, and dynamic motion places it at the forefront of the OpenAI text to video revolution. The seven reasons outlined, from its fidelity to its emerging world-modeling capabilities, demonstrate a clear technological lead. While still in development, Sora has real potential to democratize high-end video production and unlock new forms of creativity. The future of content is dynamic, personalized, and AI-powered. The question is no longer what Sora is, but how you will use it to tell your story.

Ready to transform your content strategy? Start experimenting with detailed prompt engineering today and position yourself at the cutting edge of generative video. For more insights on AI tools, check out our guide, The Future of Generative AI in Marketing.

Frequently Asked Questions (FAQs)

1. Is Sora available to the public yet?

When OpenAI announced Sora in February 2024, access was limited to red teamers and a select group of visual artists, designers, and filmmakers providing feedback, and no public release date had been announced.

2. How does Sora compare to other text-to-video models like Runway or Pika?

While other models are impressive, Sora currently demonstrates a significant lead in video duration (up to 60 seconds), coherence, and photorealism.

3. What are the ethical concerns surrounding Sora?

OpenAI is proactively addressing risks like misinformation and deepfakes by building tools to detect Sora-generated content and implementing strict safety protocols before a wider release.

4. Can I use Sora-generated videos for commercial purposes?

The final terms of service for commercial use will be clarified upon public release. It’s expected to follow a similar model to DALL-E, with users owning the rights to their generated content.

5. What kind of computing power is needed to run Sora?

Given its complexity, Sora will likely be accessible only via an API from OpenAI, requiring no local computational power from the user.

6. Does Sora have sound?

Currently, the model generates silent video. Sound design would need to be added in post-production.

7. Can I edit a Sora-generated video with another prompt?

Yes, one of Sora’s features is the ability to extend or edit existing videos using text prompts.
