The Multiverse of AI: 5 Stunning Upgrades for Your Streaming

How will AI change the way you watch, share, and discover streaming content?

The “multiverse of AI” is more than a buzzword. This guide takes a clear, service-minded look at how artificial intelligence shapes picture, sound, navigation, and shared experiences in streaming. It grounds that vision in examples you already know: Siri, Face ID, Amazon recommendations, and Unreal Engine. These show how AI already powers everyday tools and how it is transforming streaming today.

From voice discovery and dynamic watchlists to social co-viewing with lifelike avatars and immersive venues, each step reveals what is possible now and what to expect soon.

Expect a reassuring, expert tone throughout. This article helps you evaluate upgrades with confidence — not hype — through practical examples and clear next steps that focus on quality and reliability.

Key Takeaways

- See how current tools already blend into premium streaming experiences.

- Understand practical applications that improve discovery and co-viewing.

- Learn what is feasible now versus near-term innovation.

- Get service-oriented tips to optimize sound, picture, and navigation.

- Gain a pragmatic vision to plan upgrades without the hype.

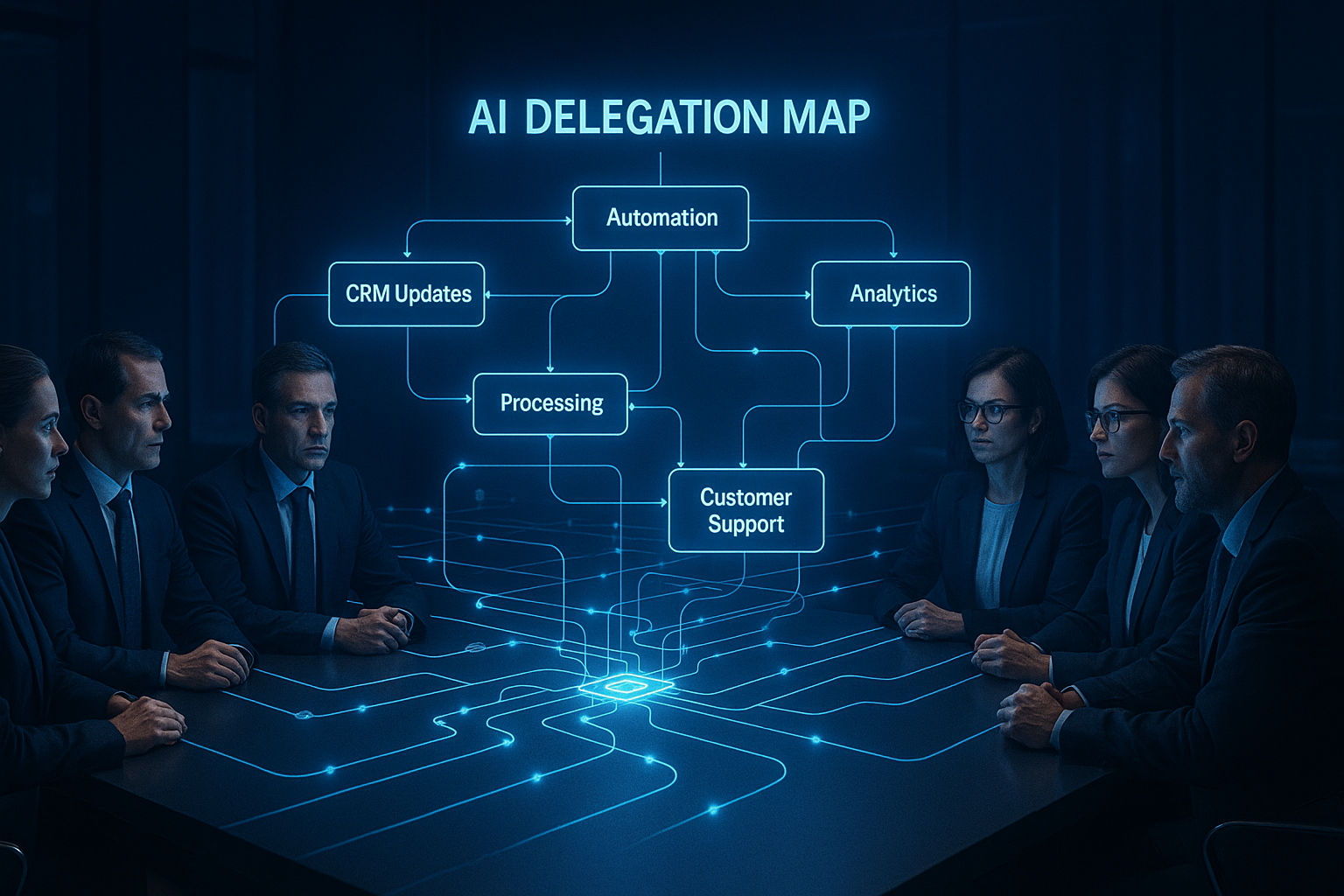

From Algorithms to Experiences: How AI Is Rewriting Streaming’s Future

Today’s streaming platforms are turning raw algorithms into fluent, user-first experiences. These shifts blend cloud services, device sensors, and smart models so you spend less time hunting and more time enjoying.

Understanding the building blocks helps make sense of practical changes.

Core technologies at work

Three disciplines power the shift: machine learning, computer vision, and natural language processing (NLP). Computer vision tracks scenes and objects. Machine learning predicts what you want to watch next. NLP lets you speak naturally and be understood.

These tools already show up in everyday services: Siri relies on natural language processing, Face ID on computer vision, and Amazon recommendations on machine learning. Combined, they bridge the screen and the physical world in ways that feel effortless.
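To make “machine learning predicts what you want next” concrete, here is a minimal sketch of item-to-item collaborative filtering, the style of recommendation associated with Amazon: two titles count as similar when the same profiles watched them. The viewing histories and function names are hypothetical, and real services combine far more signals than co-viewing.

```python
from collections import defaultdict
from math import sqrt

# Hypothetical viewing histories: profile -> set of titles watched.
histories = {
    "ana": {"Dune", "Arrival", "Interstellar"},
    "ben": {"Dune", "Interstellar"},
    "cai": {"Arrival", "Paddington"},
    "dee": {"Paddington", "Wallace & Gromit"},
}

def item_similarities(histories):
    """Cosine similarity between titles, based on which profiles watched them."""
    viewers = defaultdict(set)
    for profile, titles in histories.items():
        for title in titles:
            viewers[title].add(profile)
    sims = defaultdict(dict)
    for a in viewers:
        for b in viewers:
            if a != b:
                overlap = len(viewers[a] & viewers[b])
                if overlap:
                    sims[a][b] = overlap / sqrt(len(viewers[a]) * len(viewers[b]))
    return sims

def recommend(profile, histories, k=3):
    """Score unseen titles by summed similarity to titles the profile already watched."""
    sims = item_similarities(histories)
    seen = histories[profile]
    scores = defaultdict(float)
    for title in seen:
        for other, s in sims[title].items():
            if other not in seen:
                scores[other] += s
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]

print(recommend("ben", histories))
```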

Why the future matters now

Development teams use real usage data to improve recommendations, skip-intro and recap features, and accessibility with each release. Frequent, incremental updates add up to significant gains in reliability and usability.

- Role clarity: vision for context, models for preference, language for control.

- Interactive systems let audiences shape pacing and perspective naturally.

- Practical future: voice-first search, contextual overlays, instant personalization.

When technology gets out of the way, streaming becomes fluid and service-minded—less searching, more discovering, and better comfort for every viewer.

Personalization Across Realities: NLP, Recommendations, and Conversational Assistants

Natural language processing lets viewers state their intent by voice and get instant, context-aware recommendations. This makes discovery faster and less frustrating. Ask for a mood, an actor, or a scene, and the system returns short, relevant results.

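Behind the scenes, speech recognition turns audio into text, and natural language understanding (NLU) maps that text to an intent. Natural language generation (NLG) then crafts clear responses, summaries, and watchlists. Together they make assistants feel like expert concierges.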
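The NLU-to-NLG flow can be sketched with a toy rule-based parser. This assumes the speech has already been transcribed to text; the mood vocabulary, the `Intent` fields, and both functions are hypothetical stand-ins for a trained model.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mood-to-genre vocabulary; a trained NLU model would learn this mapping.
MOODS = {"funny": "comedy", "scary": "horror", "feel-good": "family", "tense": "thriller"}
# Naive actor detection: capitalized words after "with" (e.g. "with Tom Hanks").
ACTOR_PATTERN = re.compile(r"\bwith ([A-Z][a-z]+(?: [A-Z][a-z]+)*)")

@dataclass
class Intent:
    action: str
    genres: list = field(default_factory=list)
    actor: Optional[str] = None

def understand(utterance: str) -> Intent:
    """NLU step: map transcribed text to a structured intent."""
    text = utterance.lower()
    action = "add_to_watchlist" if "watchlist" in text else "search"
    genres = [genre for mood, genre in MOODS.items() if mood in text]
    match = ACTOR_PATTERN.search(utterance)  # run on original text so capitals survive
    return Intent(action, genres, match.group(1) if match else None)

def respond(intent: Intent) -> str:
    """NLG step: turn the intent into a short spoken confirmation."""
    parts = []
    if intent.genres:
        parts.append(" and ".join(intent.genres))
    if intent.actor:
        parts.append(f"starring {intent.actor}")
    target = " ".join(parts) or "something to watch"
    verb = "Adding" if intent.action == "add_to_watchlist" else "Searching for"
    return f"{verb} {target}."

intent = understand("Find something funny with Tom Hanks")
print(intent)
print(respond(intent))  # Searching for comedy starring Tom Hanks.
```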

How it works in practice

- Use natural language processing to ask for genre, mood, or actor and receive context-aware tiles and snippets that match intent.

- End-to-end NLU-to-NLG pipelines auto-create watchlists, pull highlights, and generate quick episode summaries.

- Conversational assistants manage profiles, parental filters, and group preferences during live watch parties.

- Digital humans—advanced chatbots—host Q&As, moderate chats, translate in real time, and remember session history.
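A sketch of how an assistant might assemble a watch-party watchlist, assuming each profile carries a parental age limit and per-genre scores. The catalog, the profile format, and the “least misery” aggregation rule (a title is only as good as its least-happy viewer) are illustrative choices, not a description of any specific service.

```python
# Hypothetical catalog: title -> (genre, minimum viewer age).
CATALOG = {
    "Paddington 2": ("family", 0),
    "Knives Out": ("mystery", 13),
    "Alien": ("horror", 18),
    "Spider-Verse": ("animation", 7),
}

def group_watchlist(profiles, catalog, size=3):
    """Rank titles for a watch party.

    Each profile has a max_age (parental filter) and genre scores in [0, 1].
    Uses the 'least misery' rule: a title scores as well as its least-happy viewer.
    """
    max_age = min(p["max_age"] for p in profiles)  # strictest parental filter wins
    ranked = []
    for title, (genre, age) in catalog.items():
        if age > max_age:
            continue
        score = min(p["likes"].get(genre, 0.0) for p in profiles)
        ranked.append((score, title))
    ranked.sort(key=lambda st: (-st[0], st[1]))
    return [title for _, title in ranked[:size]]

party = [
    {"max_age": 18, "likes": {"mystery": 0.9, "horror": 0.8, "animation": 0.6}},
    {"max_age": 12, "likes": {"family": 0.7, "animation": 0.9, "mystery": 0.4}},
]
print(group_watchlist(party, CATALOG))
```

Least misery favors titles nobody dislikes; averaging scores instead would favor titles some viewers love, at the risk of leaving someone bored.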

Lightweight learning loops refine suggestions without overwhelming you. Capability upgrades focus on clarity, trust, and transparent privacy controls.
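One way to build a lightweight learning loop is an exponential moving average per genre: each finished or skipped title nudges a score a little, so one odd evening cannot overwrite months of history. The signal values, the neutral 0.5 prior, and the learning rate are assumptions for illustration.

```python
def update_preferences(prefs, genre, signal, rate=0.2):
    """Nudge one genre score toward the latest feedback signal.

    signal: 1.0 for finished/liked, 0.0 for skipped (hypothetical encoding).
    rate: how fast tastes drift; a small value keeps single sessions from
    overwhelming history.
    """
    old = prefs.get(genre, 0.5)  # neutral prior for genres not seen yet
    prefs[genre] = old + rate * (signal - old)
    return prefs

prefs = {}
for genre, signal in [("documentary", 1.0), ("documentary", 1.0), ("horror", 0.0)]:
    update_preferences(prefs, genre, signal)
print({g: round(s, 3) for g, s in prefs.items()})
```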

| Feature | Benefit | Typical Application |
|---|---|---|
| NLU → Intent | Fast, accurate command parsing | Voice search, scene-level requests |
| NLG Responses | Concise summaries and confirmations | Episode recaps, watchlist creation |
| Conversational assistants | Concierge-level guidance | Profile management, group syncing |
| Digital humans | Immersive hosting and moderation | Live Q&A, translation, session memory |

Avatars, NPCs, and Social Viewing: Bridging the Physical World and Virtual Reality

Lifelike avatars and digital hosts turn solitary streaming into shared moments that feel real. Accurate avatar creation uses 2D and 3D scans to emulate expressions and body language. That fidelity makes reactions—laughter, surprise, a raised eyebrow—carry across distance.


Digital humans combine speech, computer vision, and NLU to act as assistants and event hosts. These NPCs welcome guests, spotlight speakers, and manage interactions so groups stay focused and included.

Identity integrity matters. Clear provenance checks, opt-in verification, and user alerts reduce fraud and deepfake risks. Moderation tools work in real time to discourage abuse without breaking immersion.
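Provenance checks can be as simple as a tag that binds an avatar asset to a verified account. The sketch uses an HMAC from Python’s standard library; the key, the tag format, and the function names are hypothetical. A real deployment would more likely use public-key signatures so clients can verify without holding a shared secret.

```python
import hashlib
import hmac

# Hypothetical secret held by the platform's avatar service (demo only).
SERVICE_KEY = b"demo-key-not-for-production"

def sign_avatar(asset: bytes, owner_id: str) -> str:
    """Issue a provenance tag binding an avatar asset to its verified owner."""
    message = owner_id.encode() + b"|" + hashlib.sha256(asset).digest()
    return hmac.new(SERVICE_KEY, message, hashlib.sha256).hexdigest()

def verify_avatar(asset: bytes, owner_id: str, tag: str) -> bool:
    """Return True only if both the asset and the owner match the issued tag."""
    expected = sign_avatar(asset, owner_id)
    return hmac.compare_digest(expected, tag)  # constant-time comparison

avatar = b"mesh-and-texture-bytes"
tag = sign_avatar(avatar, "user-42")
print(verify_avatar(avatar, "user-42", tag))         # True
print(verify_avatar(avatar, "impostor", tag))        # False: wrong owner
print(verify_avatar(avatar + b"x", "user-42", tag))  # False: asset was altered
```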

- Presence: computer vision captures micro-gestures for authentic social viewing.

- Comfort: easy controls for mute, expression privacy, and noise suppression.

- Support: NPC coaches guide new users and optimize picture and sound.

These concepts prioritize respectful interaction and reliable role clarity so your shared experiences feel safe, natural, and service-oriented.

Immersive Worlds On-Demand: Deep Learning for VR Scene Generation at Scale

On-demand virtual venues now let you enter a concert, stadium, or gallery in seconds. Deep neural networks are trained to generate full scenes quickly. Companies such as Nvidia, Meta, and DeepMind are developing models that need minimal human input, which helps venues scale reliably.

Computer vision drives camera logic and overlays during live events. Smart cameras pick angles, show context-aware stats, and trigger instant replays without manual control.
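A hedged sketch of automatic camera selection, assuming an upstream vision model already scores each feed for on-screen action and counts visible players. The scoring weights and the `switch_margin` hysteresis value are hypothetical; the margin keeps the broadcast from cutting back and forth between two similar angles.

```python
from dataclasses import dataclass

@dataclass
class CameraFeed:
    name: str
    action_score: float  # hypothetical output of a vision model, 0..1
    faces_visible: int   # key players or performers detected in frame

def score(feed: CameraFeed) -> float:
    # Hypothetical weighting: action dominates, visible faces add a little.
    return feed.action_score + 0.05 * feed.faces_visible

def pick_camera(feeds, current, switch_margin=0.15):
    """Choose the feed to broadcast, with hysteresis so the view doesn't flicker."""
    best = max(feeds, key=score)
    current_feed = next(f for f in feeds if f.name == current)
    # Only cut away if the new angle is clearly better than the current one.
    if score(best) - score(current_feed) > switch_margin:
        return best.name
    return current

feeds = [
    CameraFeed("wide", 0.40, 2),
    CameraFeed("goal-left", 0.85, 3),
    CameraFeed("bench", 0.10, 5),
]
print(pick_camera(feeds, current="wide"))
```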

Reinforcement learning turns each stream into a data flywheel. Every session feeds models with crowd movement, gaze heatmaps, and latency metrics, and over time rendering quality and comfort improve automatically.
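The flywheel idea can be illustrated with a multi-armed bandit, the simplest reinforcement learning setting: the system tries rendering presets, observes a comfort reward, and gradually favors what works. The presets, the simulated reward values, and the epsilon-greedy strategy are assumptions chosen for clarity; production systems would use richer state and real telemetry.

```python
import random

class EpsilonGreedy:
    """Minimal multi-armed bandit: pick a rendering preset, learn from a comfort reward."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.counts = {a: 0 for a in self.arms}
        self.values = {a: 0.0 for a in self.arms}  # running mean reward per arm
        self.rng = random.Random(seed)

    def select(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)                # explore
        return max(self.arms, key=lambda a: self.values[a])  # exploit

    def update(self, arm, reward):
        self.counts[arm] += 1
        # Incremental mean: no need to store every past reward.
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]

# Simulated sessions: hypothetical mean comfort reward per preset.
true_comfort = {"low": 0.55, "medium": 0.80, "high": 0.65}
env = random.Random(1)
bandit = EpsilonGreedy(true_comfort, epsilon=0.1, seed=0)
for _ in range(2000):
    preset = bandit.select()
    bandit.update(preset, env.gauss(true_comfort[preset], 0.1))
print(max(bandit.values, key=bandit.values.get))
```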

- Practical applications: latency-aware scene simplification on lower-power hardware and richer textures on high-end systems.

- Capabilities: instant re-skins, personalized seating, and accessibility-focused views that match your preferences.

- Innovation: better lighting, occlusion handling, and scene physics that preserve creative intent.
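Latency-aware simplification can be sketched as a controller that compares recent frame times with the frame budget. The tier names, the 90 fps target, and the headroom threshold are hypothetical values for illustration.

```python
# Hypothetical quality tiers, ordered from richest to simplest.
TIERS = ["ultra", "high", "medium", "low"]

def choose_tier(frame_times_ms, current, target_fps=90, headroom=0.8):
    """Step rendering quality down when frames run long, up when there is spare time.

    frame_times_ms: recent frame render times reported by the device.
    headroom: fraction of the frame budget under which we step quality back up.
    """
    budget = 1000.0 / target_fps  # about 11.1 ms per frame at 90 fps
    avg = sum(frame_times_ms) / len(frame_times_ms)
    i = TIERS.index(current)
    if avg > budget and i < len(TIERS) - 1:
        return TIERS[i + 1]  # too slow: simplify the scene
    if avg < budget * headroom and i > 0:
        return TIERS[i - 1]  # spare headroom: restore detail
    return current

print(choose_tier([13.0, 12.5, 14.2], current="high"))  # standalone headset struggling
print(choose_tier([6.0, 6.4, 5.9], current="medium"))   # desktop GPU with spare time
```

Stepping one tier at a time, with a gap between the step-down and step-up thresholds, avoids oscillating between two tiers every few frames.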

| Component | Primary Benefit | Typical Application |
|---|---|---|
| Deep learning engines | Fast, scalable scene generation | Concerts, arenas, themed worlds |
| Computer vision | Adaptive camera angles | Live stats, replays, overlays |
| Reinforcement data flywheels | Continuous quality gains | Gaze-driven rendering, crowd tuning |
| Device-aware rendering | Balanced quality and performance | Mobile simplification, high-end fidelity |

The result is a reality layer that feels live and responsive, even at scale. You gain the ability to choose comfort and fidelity, while innovation keeps improving the experience.

Devices, Sensors, and Edge AI: Low-Latency Interaction Without the Cloud

On-device intelligence brings fast, reliable interaction without constant cloud ties. Local models cut latency and keep private control in the room. That makes streaming controls feel instant and dependable.

SuperFly (~94M parameters) and ChickBrain (3.2B parameters) show what compact, high-performing models can do. SuperFly targets tiny IoT endpoints for quick voice commands, while ChickBrain delivers near-desktop language capability on laptops and headsets.
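Parameter counts translate directly into storage. A quick calculation for the weights alone, assuming 16-, 8-, or 4-bit precision (the article does not say which precision these models ship in), shows why a 94M-parameter model suits a remote while a 3.2B-parameter model needs laptop- or headset-class memory:

```python
def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone (no activations or runtime overhead)."""
    return params * bits_per_weight / 8 / 1e9

for name, params in [("SuperFly", 94e6), ("ChickBrain", 3.2e9)]:
    for bits in (16, 8, 4):
        print(f"{name:<10} {bits:>2}-bit: {weight_memory_gb(params, bits):.2f} GB")
```

At 16 bits, SuperFly's weights take roughly 0.19 GB and ChickBrain's about 6.4 GB; at 4 bits, ChickBrain drops to about 1.6 GB. Activations and runtime overhead come on top of these figures.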

On-device models for offline voice control

CompactifAI, a quantum-inspired compression algorithm, shrinks models while preserving their accuracy. That lets more capable models fit on limited hardware and saves battery life. Multiverse Computing offers these models through AWS and for direct on-device deployment, and reports talks with Apple, Samsung, Sony, and HP to bring them to consumer devices.
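CompactifAI's method is described here only as quantum-inspired, and the sketch below does not reproduce it. Instead it shows a simpler, widely used compression technique, symmetric int8 quantization, to illustrate the general trade: storing weights in fewer bits shrinks a model while keeping the rounding error bounded. The weights are made-up values.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: 1 byte per weight plus one float scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # guard against an all-zero tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.003, 0.45, -0.6]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"max error {max_err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```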

Smart HMDs and sensor-driven interaction

Headsets now use gaze, gesture, and bio-signals to predict movement and trigger voice navigation. Sensors read intent and adjust comfort modes or captions in real time. Algorithms fuse voice, hands, and head pose so controls stay simple for first-time users.
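Sensor fusion of this kind can be sketched as weighted late fusion: each modality proposes an intent with a confidence, and the system acts only when the combined evidence clears a threshold. The modality weights, intent names, and threshold are hypothetical.

```python
from collections import defaultdict

# Hypothetical per-modality reliability weights.
WEIGHTS = {"voice": 0.5, "gaze": 0.3, "gesture": 0.2}

def fuse(signals, threshold=0.5):
    """Late fusion: combine each sensor's (intent, confidence) guess into one decision.

    Returns the winning intent, or None when nothing is confident enough,
    so the interface can ask the user instead of guessing.
    """
    scores = defaultdict(float)
    for modality, (intent, confidence) in signals.items():
        scores[intent] += WEIGHTS[modality] * confidence
    if not scores:
        return None
    intent, score = max(scores.items(), key=lambda kv: kv[1])
    return intent if score >= threshold else None

print(fuse({"voice": ("open_captions", 0.9),
            "gaze": ("open_captions", 0.7),
            "gesture": ("pause", 0.6)}))
print(fuse({"gaze": ("pause", 0.6), "gesture": ("pause", 0.5)}))
```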

Compact models for in-room assistants

Edge-first design means processing happens on your device. Bandwidth use drops and dropouts become rarer. Setup is quick, after which your remote or HMD responds without a round trip to the cloud. The table below summarizes the practical building blocks for edge intelligence.

“Edge processing makes the experience feel immediate, private, and reliable—exactly what premium streaming should deliver.”

| Component | Benefit | Typical Use |
|---|---|---|
| SuperFly (94M) | Tiny, low-latency voice | IoT remotes, simple commands |
| ChickBrain (3.2B) | Near-desktop language | Laptops, HMD assistants |
| CompactifAI | Higher capacity on-device | Battery-friendly deployments |
| Smart sensors | Natural interaction | Gaze, gesture, bio-signal control |

- Edge-first processing keeps control local and private.

- Machine intelligence at the edge guards performance when networks fluctuate.

- These advances link your device to the physical world with responsive, human-friendly controls.

Multiverse of AI Storytelling: Branching Timelines, Conscious Simulations, and Audience Agency

Streaming narratives now include decision points that make each viewing a distinct creative journey. Branching timelines turn passive watching into co-authored stories, where choices alter reality layers and lead to different endings.

Natural language processing lets you steer scenes by voice. Say a preference and the plot adapts without menus or pauses. This lowers friction and raises engagement.

Interactive narratives across alternate realities: viewers steering plots in real time

Designers can map branches so each decision spawns a new route. AI agents navigate those timelines and keep continuity for viewers.
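Branch mapping and continuity can be represented as a small graph plus a set of flags that remember earlier choices. In this sketch the scenes, choices, and the `has_map` flag are hypothetical; hiding branches whose prerequisites are unmet is one simple way to keep the story consistent.

```python
# Hypothetical story graph: scene -> {choice: next_scene}.
STORY = {
    "dock": {"follow the stranger": "alley", "board the ship": "ship"},
    "alley": {"take the map": "ship"},
    "ship": {"open the vault": "vault_ending", "head home": "home_ending"},
    "vault_ending": {},
    "home_ending": {},
}
SETS_FLAG = {"take the map": "has_map"}   # choices that record continuity facts
REQUIRES = {"vault_ending": "has_map"}    # scenes that depend on an earlier choice

def available(scene, flags):
    """Choices offered at a scene, hiding branches whose prerequisites aren't met."""
    return {choice: nxt for choice, nxt in STORY[scene].items()
            if REQUIRES.get(nxt) is None or REQUIRES[nxt] in flags}

def play(choices, start="dock"):
    """Walk the graph, enforcing continuity so later scenes respect earlier choices."""
    scene, flags, path = start, set(), [start]
    for choice in choices:
        options = available(scene, flags)
        if choice not in options:
            raise ValueError(f"{choice!r} is not available in {scene!r}")
        if choice in SETS_FLAG:
            flags.add(SETS_FLAG[choice])
        scene = options[choice]
        path.append(scene)
    return path

print(play(["follow the stranger", "take the map", "open the vault"]))
print(available("ship", flags=set()))
```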

Speculative horizons: simulated universes, transhuman interfaces, and expanded creativity

Conscious simulations could act as a safe creative sandbox, letting creators test ideas in a parallel realm before public release.

“Measured development keeps spectacle usable: clear signposting, guardrails, and rollback paths prevent confusion.”

- Replayability: fans compare routes and remix community lore.

- Ethics: be transparent about what’s simulated or persistent.

- Roadmap: pilot arcs, A/B narrative beats, then full convergence events.

This is a pragmatic future. Start small, measure engagement, then expand scope with confidence.
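“Measure engagement” can be made concrete with a standard two-proportion z-test comparing, for example, episode completion rates under two narrative beats. The counts below are hypothetical pilot numbers, and the normal approximation assumes reasonably large samples.

```python
from math import erf, sqrt

def two_proportion_z(success_a, n_a, success_b, n_b):
    """Compare completion rates of two narrative variants.

    Returns (z, two-sided p-value) from the pooled two-proportion z-test,
    a normal approximation that needs reasonably large samples.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf; two-sided tail probability.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value

# Hypothetical pilot: viewers who finished the episode under beat A vs beat B.
z, p = two_proportion_z(success_a=420, n_a=1000, success_b=468, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```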

Conclusion

Here’s a concise plan to turn recent technical advances into better nights in front of the screen.

Artificial intelligence powers clear gains today: faster discovery, safer sharing, and smoother playback. Lean into natural language processing to simplify search, summaries, and group coordination.

Pair machine learning with computer vision for smarter highlights and camera choices. Use on-device models like SuperFly and ChickBrain to cut latency and keep key data local.

Start small: pilot voice control, refine recommendations, try a social watch room, then scale what helps. The approach is practical: measure outcomes, iterate, and favor quality over spectacle.

Quick next steps: enable voice control, tune suggestions, test a watch room, and consider edge upgrades for reliable evenings. Enjoying your content should feel effortless and dependable.

FAQ

What technologies power smarter recommendations and search?

How does conversational assistance improve discovery?

Can avatars and digital hosts make co-watching feel more real?

Are AI-generated venues and scenes realistic for live events?

What role do edge devices and on-device models play?

Is interactive storytelling secure and controllable?

How do data flywheels improve streaming quality?

Will these technologies work on my existing devices?

How is user privacy handled with sensors and bio-signals?

What are the creative possibilities for storytellers?
